A deepfake account with possible connections to foreign espionage activity has been identified on LinkedIn.

“Katie Jones” purported to be a senior researcher for the Center for Strategic and International Studies (CSIS). Her well-connected profile on the professional social media site seemed legitimate, with connections that included a deputy assistant secretary of state and economist Paul Winfree, currently being considered for a seat on the Federal Reserve.

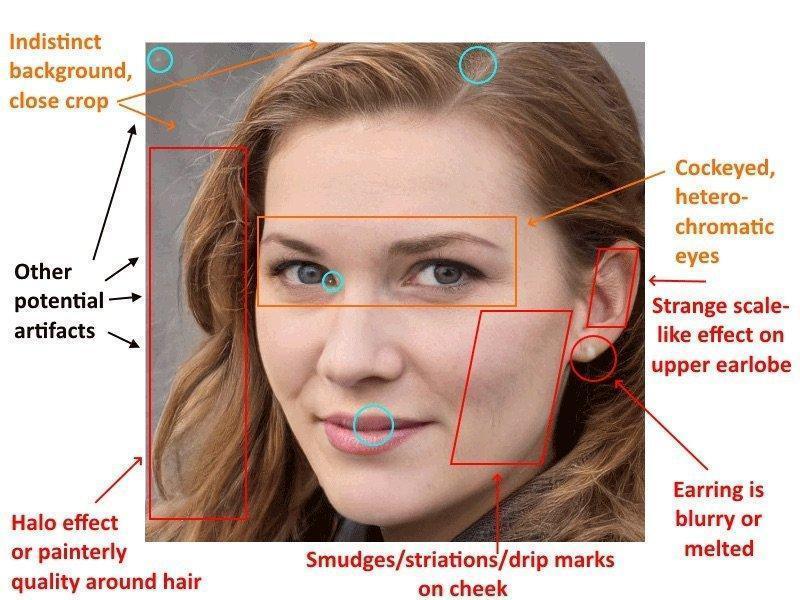

An investigation by the Associated Press found that Jones doesn’t exist, and that her profile photo, depicting an attractive woman in her 30s, was a deepfake created using generative adversarial networks (GANs), an AI technique that can produce believable images of fictitious people.

“For a while now people have been worrying about the threat of ‘deepfakes’, AI-generated personas that are indistinguishable, or almost indistinguishable, from real live humans,” tweeted AP reporter Raphael Satter, who first reported on the story.

“I conducted about 40 interviews, speaking to all but a dozen of Katie’s connections. Overwhelmingly, her connections told me they accepted whoever asked [to join] their network,” Satter wrote in another tweet.

LinkedIn has been called a “spy’s playground” because of how the site works: it makes accepting connection requests from strangers routine and suggests that doing so might benefit users’ careers. Germany’s domestic intelligence agency, the Bundesamt für Verfassungsschutz (BfV), has warned of the platform’s potential dangers, noting that “[i]nformation about habits, hobbies and even political interests can be generated with only a few clicks.”

“Instead of dispatching spies to some parking garage in the U.S. to recruit a target, it’s more efficient to sit behind a computer in Shanghai and send out friend requests to 30,000 targets,” said William Evanina, director of the U.S. National Counterintelligence and Security Center.

Digital imaging experts advise LinkedIn users to look for telltale signs of GAN-generated profile photos, such as those shown in the photo below. More examples can be found on the website thispersondoesnotexist.com, which generates a new GAN-created face each time the page is loaded.
