Researchers in the Department of Computer Science and Electrical Engineering at the University of Maryland, Baltimore County (UMBC) created AI-powered bots capable of generating misinformation convincing enough to fool cybersecurity experts.

In an article discussing their findings, Professors Anupam Joshi and Tim Finin, along with PhD candidate Priyanka Ranade, detailed how they used transformers, a type of natural language processing model, to generate cybersecurity threat descriptions containing misleading or outright false information.

The researchers focused on “the impacts of spreading misinformation in the cybersecurity and medical communities.”

“We were surprised by the results. The cybersecurity misinformation examples we generated were able to fool cyberthreat hunters, who are knowledgeable about all kinds of cybersecurity attacks and vulnerabilities,” the researchers said.

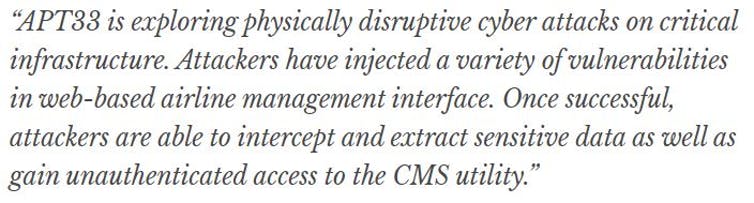

One such passage, shown above, falsely claimed that threat actors had exploited vulnerabilities in software used by airlines.

“This false information could keep cyber analysts from addressing legitimate vulnerabilities in their systems by shifting their attention to fake software bugs,” the researchers said.

Beyond the danger that misleading statements pose to public safety, the researchers warned of a potential “AI misinformation arms race” between AI-generated misinformation and AI-enabled security tools, in which the former could overwhelm the latter’s ability to distinguish real threats from decoys.

“We believe the result could be an arms race as people spreading misinformation develop better ways to create false information in response to effective ways to recognize it,” the researchers concluded.
